Money AIst

Financial history is rife with large-scale heists, some criminal, others systemic. From Nick Leeson’s rogue trading, which collapsed Barings Bank in 1995, to Bernie Madoff’s Ponzi scheme, exposed in 2008, financial scandals have laid bare regulatory flaws. More insidious are policy-driven heists, like the 1933 U.S. gold confiscation, which forced citizens to surrender their gold at $20.67 an ounce before the government revalued it to $35 in 1934, or George Soros’s 1992 Black Wednesday trade, which forced a costly devaluation of the British pound. Whether through fraud or policy, history shows how wealth is repeatedly siphoned from the many to the few.

With the first quarter of the Jubilee year now firmly in the history books, investors are grappling with a harsh reality: the trades that made them seemingly wealthy in 2024 (long positions in the Magnificent 7, Bitcoin, and the AI-driven Nasdaq rally) have, over the first 94 days, unravelled into a ‘Money AIst’.

A closer look at the year-to-date performance of the group, now dubbed the ‘Maleficent 7’ in a nod to its political connotations, shows that Tesla has, unsurprisingly, been the worst performer among the seven stocks once expected to dominate the world, while META is the only one nearly matching the performance of the S&P 500 year to date.

Return of META (blue line); Microsoft (red line); Apple (green line); Tesla (yellow line); Nvidia (purple line); Amazon (orange line); Alphabet (white line).

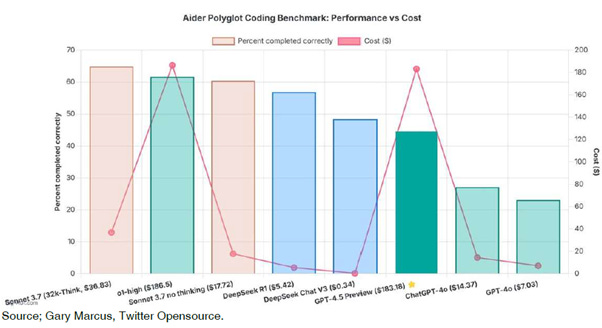

To put things back into context, by November 2024 Sam Altman, co-founder and CEO of OpenAI, the company presented as the icon of the supposed AI revolution, faced a harsh reality: Project Orion, OpenAI’s push for GPT-5, had hit a wall. Scaling LLMs is costly. GPT-3 used 12,288-dimensional word vectors, and every widening of those vectors raises compute needs steeply, roughly with the square of the width, for diminishing returns. OpenAI spent 10x more training GPT-4 than GPT-3 for only modest gains. Orion followed the same pattern, with 25x more compute and $500M per six-month training cycle, yet the results underwhelmed. Released as GPT-4.5, it still had a 37% hallucination rate on OpenAI’s SimpleQA benchmark, while Baidu’s Ernie 4.5 reportedly outperformed it at a fraction of the cost. In a nutshell, Project Orion may rank among AI’s worst investments.
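The following back-of-the-envelope sketch shows why widening the vectors gets expensive. It relies on a common rule of thumb from the scaling-law literature: a dense transformer has about 12·L·d² non-embedding parameters, and training costs about 6 FLOPs per parameter per token. Both figures are rough approximations, not OpenAI’s own accounting. GPT-3’s configuration (96 layers, about 300 billion training tokens) comes from the GPT-3 paper rather than from this article.

```python
def approx_params(n_layers: int, d_model: int) -> float:
    """Approximate non-embedding parameters of a dense transformer: 12 * L * d^2."""
    return 12 * n_layers * d_model ** 2

def approx_train_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute: ~6 FLOPs per parameter per training token."""
    return 6 * n_params * n_tokens

# GPT-3's published configuration: 96 layers, 12,288-dimensional vectors,
# trained on roughly 300 billion tokens.
gpt3_params = approx_params(96, 12_288)
gpt3_flops = approx_train_flops(gpt3_params, 300e9)
print(f"GPT-3: ~{gpt3_params / 1e9:.0f}B parameters, ~{gpt3_flops:.2e} training FLOPs")

# Doubling the vector width at the same depth quadruples the parameter count
# (quadratic, not exponential, growth), and per-token compute rises with it.
wider = approx_params(96, 2 * 12_288)
print(f"2x width -> {wider / gpt3_params:.0f}x parameters")
```

The estimate of about 174 billion parameters and about 3.1 × 10^23 FLOPs lands close to the 175 billion parameters and 3.14 × 10^23 FLOPs reported for GPT-3. That accuracy is why the approximation is popular for quick cost estimates.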

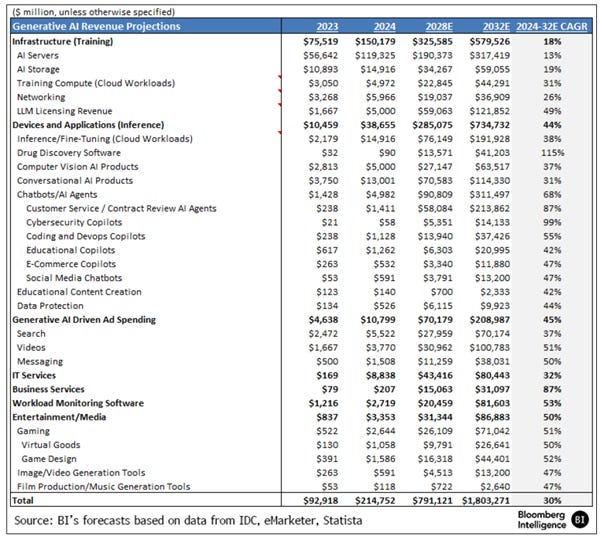

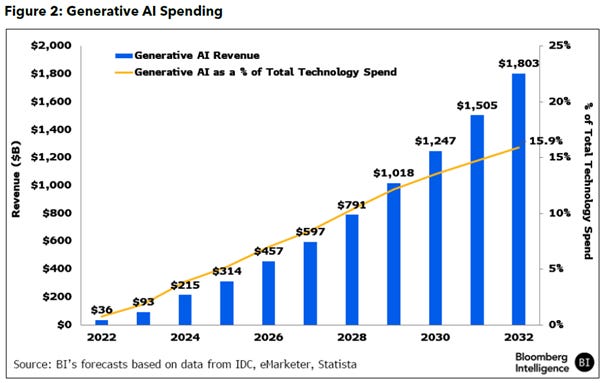

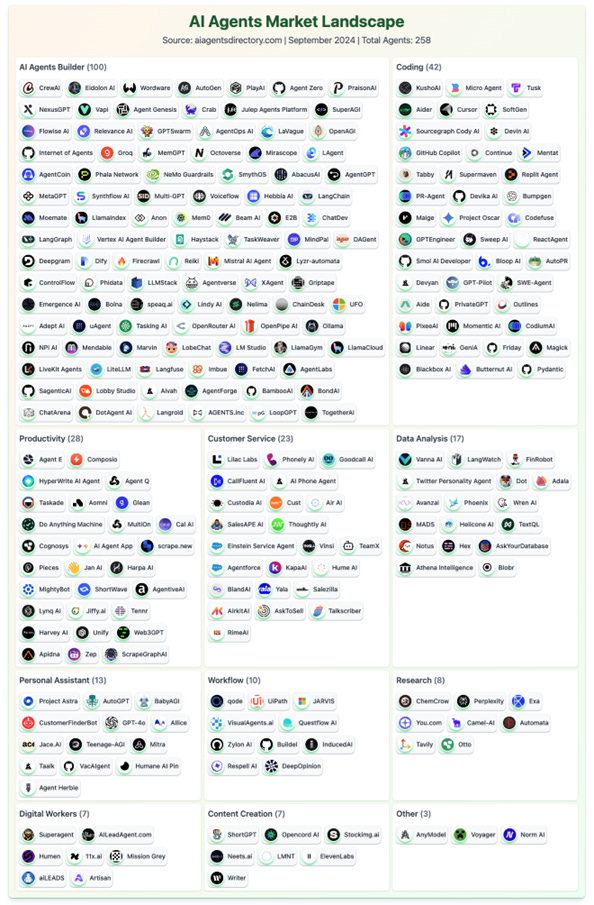

For the time being, training foundational LLMs remains the main revenue driver for generative AI, but traction from GitHub Copilot and new apps like Perplexity and Sora is expanding the market. Generative AI, projected to reach $1.8 trillion by 2032, is growing at a 30% CAGR, boosting sales across hardware, software, services, ads, and gaming. As businesses integrate AI to enhance operations, its share of IT spending could rise from under 2% to 14-16%.
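The projection can be sanity-checked by running the compounding backwards. This minimal sketch assumes the 30% CAGR runs from a 2023 base year through 2032, since the text does not state the base year. All figures are in billions of dollars.

```python
def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Starting value that grows to end_value after `years` at annual rate `cagr`."""
    return end_value / (1 + cagr) ** years

# Assumption: the 30% CAGR applies from a 2023 base year to 2032 (9 years).
YEARS = 2032 - 2023
base_2023 = implied_start_value(1_800, 0.30, YEARS)  # $ billions

print(f"Implied 2023 generative AI market: ~${base_2023:.0f}B")

# Compounding forward again recovers the $1.8 trillion 2032 target.
print(f"Check: ${base_2023 * 1.30 ** YEARS:,.0f}B in 2032")
```

Under that assumption, the forecast implies a market of roughly $170 billion in 2023. A different base year would change the figure materially.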

Indeed, as the world is supposedly in the midst of an AI revolution, generative AI spending has apparently become essential for enterprises. Growth is fuelled by ongoing hardware investment, chatbot adoption, and copilot-style subscriptions, while retrieval-augmented generation (RAG) improves accuracy by drawing on proprietary data. Nvidia’s growth forecasts have surged, and Microsoft expects strong gains from Azure and its copilots. On the consumer front, Google’s AI Overviews launched at scale, and Meta AI now has 700 million monthly active users (MAUs).

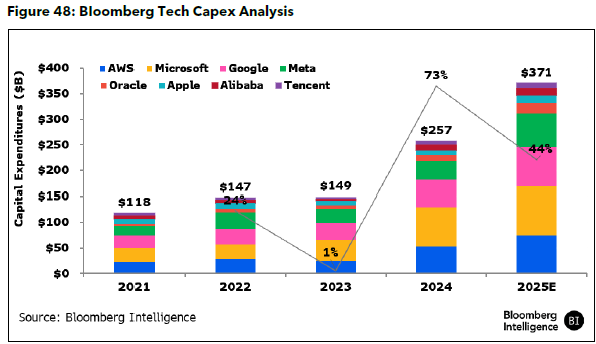

In this context, the much-hyped AI-driven capex boom has been in full swing, with hyperscalers set to spend $340 billion this year, on par with Egypt’s GDP. This frenzy has propped up markets, and recent tech earnings hint at even higher AI spending, pushing 2025 capex to $371 billion, a 44% jump from 2024. Microsoft CEO Satya Nadella’s focus on leasing from 2027 onward suggests a slowdown ahead, but Meta, Google, and Amazon have all pledged aggressive outlays. Meanwhile, the $100 billion that SoftBank, OpenAI, and Oracle have committed for 2025, scaling to $500 billion over four years under Project Stargate, could further reshape spending forecasts.
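The figures quoted above also pin down the 2024 base they imply. This minimal sketch uses only the article’s numbers. It assumes the $371 billion forecast and the 44% growth rate describe the same group of hyperscalers.

```python
CAPEX_2025 = 371.0   # $ billions, 2025 forecast quoted in the text
GROWTH_2025 = 0.44   # 44% jump over 2024

capex_2024 = CAPEX_2025 / (1 + GROWTH_2025)
print(f"Implied 2024 hyperscaler capex: ~${capex_2024:.0f}B")

# Stargate: $500 billion over four years versus $100 billion committed for 2025.
STARGATE_TOTAL, STARGATE_YEARS, STARGATE_YEAR_ONE = 500.0, 4, 100.0
avg_per_year = STARGATE_TOTAL / STARGATE_YEARS
print(f"Stargate average: ${avg_per_year:.0f}B/yr vs ${STARGATE_YEAR_ONE:.0f}B in 2025")
```

The implied 2024 base is about $258 billion. The Stargate commitment averages $125 billion a year, so outlays would have to rise after the first year to reach the headline total.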

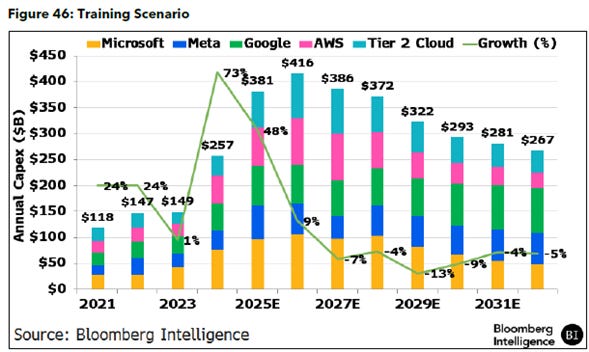

The risk of a capex cliff is higher if hyperscalers keep pouring money into training clusters instead of shifting to inference workloads. Alphabet, whose TPUs handle both tasks, can adapt faster than Microsoft-OpenAI and Meta, which rely on Nvidia for training. Most firms will likely optimize inference spending for cost and latency, unlike pre-training, which demands massive compute clusters. A capex slowdown is likely by 2027-28 if firms keep scaling training infrastructure. xAI leads in training-cluster size with 100,000+ GPUs, followed by Meta, Microsoft-OpenAI, Alphabet, and Amazon-Anthropic. Training, now 40-60% of hyperscaler capex, may drop to 20-30% by 2032 as spending aligns with revenue.

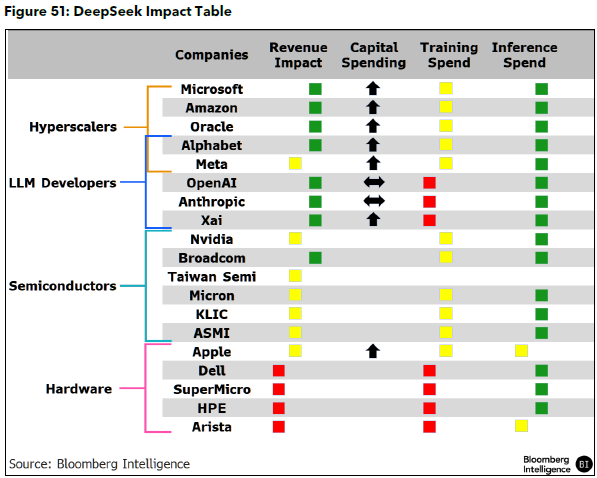

Infrastructure expenditures for generative AI training are expected to be about three times larger than those for inference, driven by server and storage needs. A Bloomberg Intelligence survey shows 75% of US CIOs plan to increase IT infrastructure budgets in 2025, with 38% boosting spending by more than 11%. Generative AI infrastructure-as-a-service (IaaS) could generate $236 billion in sales over the next decade, with training compute resources at $44 billion and cloud-based inference workloads reaching $191 billion by 2032. Networking may contribute $37 billion. Computer-vision AI products could grow to $64 billion, while conversational AI products may hit $114 billion. AI could add $580 billion to the training market by 2032, up from $75 billion in 2023.

In software, generative AI could contribute $446 billion by 2032, growing 64% annually and benefiting areas like cybersecurity, drug discovery, and AI assistants. Specialized assistant software may hit $95 billion in sales by 2030, and educational software spending is expected to rise. Generative AI will also accelerate gaming and creative software development, reducing barriers to entry. In digital advertising, AI will enhance targeting and create new formats, adding $210 billion by 2032. In IT and business services, generative AI tools could generate $111 billion in sales, helping companies drive growth and cut costs.

Hyperscale cloud providers are expected to increase capital spending by 40-50% in 2025, bolstered by the Project Stargate data-center build-out and EU funding, extending the cycle. While a shift to smaller distributed clusters could slow capex growth after 2025, sovereign funds may offset this with additional spending. Before the DeepSeek R1 model release, companies planned to build 1-million-GPU compute clusters for foundation models, driving hyperscaler capex. However, DeepSeek’s breakthrough with smaller distributed clusters may now limit the scale of training infrastructure.
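Two of the figures above can be cross-checked with simple arithmetic. The first is the growth rate implied by the training market rising from $75 billion in 2023 to $580 billion in 2032. The second is whether the training and inference components add up to the $236 billion IaaS total. This is a minimal sketch using only the numbers quoted in the text.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that turns start_value into end_value over `years`."""
    return (end_value / start_value) ** (1 / years) - 1

# Training market: $75B in 2023 to $580B in 2032 (figures from the text).
training_cagr = cagr(75, 580, 2032 - 2023)
print(f"Implied training-market growth: {training_cagr:.1%} a year")

# IaaS components quoted in the text, compared with the $236B headline figure.
iaas_components = {"training compute": 44, "cloud-based inference": 191}
print(f"Components: ${sum(iaas_components.values())}B vs $236B quoted")
```

The training figures imply growth of roughly 25% a year. The two IaaS components sum to $235 billion, within rounding of the $236 billion headline.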

While everything looked rosy for the AI hype to carry on for much of the rest of this decade, the classic advice from All the President's Men, "Follow the money", applies perfectly to understanding the value destruction in the LLM sector. No major LLM developer, from OpenAI and Anthropic to Google, AWS, Meta, Apple, and others, has a viable business model as of today. OpenAI lost $8 billion on $5 billion in revenue last year, and that is likely an underestimate, as the depreciation of its chip holdings is underreported: these chips do not last six years, and two years would be optimistic. Anthropic, similarly, lost $1.6 billion on $890 million in revenue. Even Microsoft, which sells Copilot to fewer than 1% of Windows users, is likely operating at similar losses. With no commercial benefit from scaling (80x more compute for GPT-5 yielded no return), profits are unlikely to improve. As competitors like DeepSeek and Ernie emerge, many of the 40 smaller players in the long tail of LLM developers are likely to fold within the next year.
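The depreciation argument is easy to quantify. The sketch below applies straight-line depreciation to a hypothetical $10 billion GPU fleet, an illustrative number rather than an OpenAI figure, to show how the assumed useful life changes the annual expense. It also restates the reported losses per dollar of revenue using the figures above.

```python
def annual_depreciation(cost: float, useful_life_years: float, salvage: float = 0.0) -> float:
    """Straight-line depreciation: (cost - salvage) spread evenly over the useful life."""
    return (cost - salvage) / useful_life_years

def loss_per_revenue_dollar(net_loss: float, revenue: float) -> float:
    """Dollars lost for every dollar of revenue."""
    return net_loss / revenue

FLEET_COST = 10.0  # hypothetical $10B GPU fleet, for illustration only

for life in (6, 4, 2):
    print(f"{life}-year life: ${annual_depreciation(FLEET_COST, life):.2f}B of depreciation per year")

# Loss and revenue figures quoted in the text ($ billions).
print(f"OpenAI: ${loss_per_revenue_dollar(8.0, 5.0):.2f} lost per $1 of revenue")
print(f"Anthropic: ${loss_per_revenue_dollar(1.6, 0.89):.2f} lost per $1 of revenue")
```

Cutting the assumed life from six years to two triples the annual charge. That is why the useful-life assumption matters so much for reported losses.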

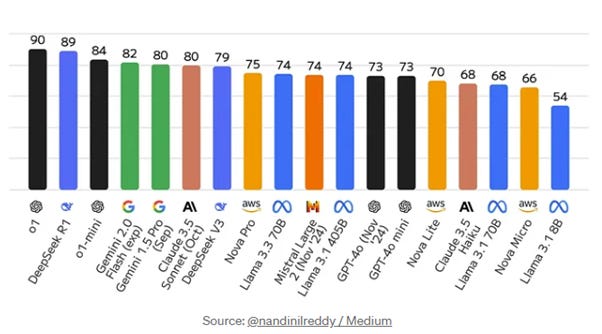

The harsh reality is that foundation models have become commodities. When a Chinese open project like DeepSeek can replicate 90% of GPT-4’s performance at just 2–10% of the cost, it undermines OpenAI's business model of charging high API access fees. It’s difficult to command premium prices for a product when a well-funded competitor offers a similar product essentially for free.
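To see why that gap is so damaging, compare performance per dollar. This sketch treats "performance" as a single score relative to GPT-4, which is itself a simplification. It uses the 90% and 2-10% figures from the text.

```python
def performance_per_dollar_multiple(relative_performance: float, relative_cost: float) -> float:
    """Challenger's performance per dollar relative to the incumbent (incumbent = 1.0)."""
    return relative_performance / relative_cost

# From the text: ~90% of GPT-4's performance at 2-10% of the cost.
for cost_share in (0.02, 0.10):
    multiple = performance_per_dollar_multiple(0.90, cost_share)
    print(f"90% of the performance at {cost_share:.0%} of the cost: {multiple:.0f}x more per dollar")
```

Even at the conservative end, the challenger delivers about 9 times more per dollar. At the other end, it delivers about 45 times more.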

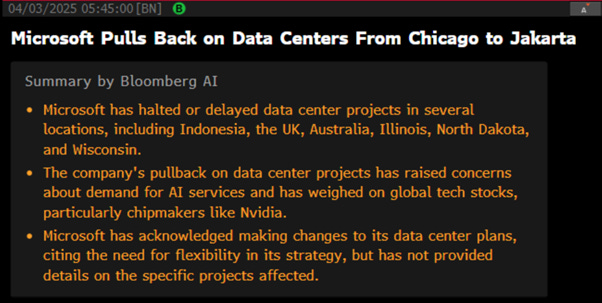

Sell-side forecasts aside, the dark reality is that after multiple denials, Microsoft chose Liberation Day to confirm that the AI-related data center boom, which Wall Street banksters had expected to transform the world and drive economic growth to infinity and beyond, was as artificial as the intelligence propagated by large language models over the past 18 months.

In the semiconductor industry, the launch of Nvidia’s Blackwell chip has been a disaster. Each chip stack consumes as much power as 411 homes, creating severe cooling challenges for data centers. Despite Nvidia adjusting its cooling specifications, uneven heat distribution creates hotspots, risking temporary shutdowns and early obsolescence. Dr. Emily Carter, a data center expert, notes that major players like Microsoft and Amazon have scaled back or canceled their Blackwell orders. Digitimes reported that Nvidia's GB200 faces early retirement, with the GB300 slated for a smoother launch. Meanwhile, Microsoft recently canceled a $12 billion option with CoreWeave, which has become the largest independent operator of LLM data centers. Despite $1.9 billion in 2024 revenue, CoreWeave reported a net loss of $863 million and carries $8 billion in debt, with debt payments likely reaching $2 billion this year.
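The CoreWeave figures above translate into a few standard ratios. This back-of-the-envelope sketch uses only the numbers in the text. The $2 billion of debt payments is the article’s estimate, compared here against 2024 revenue as a rough proxy.

```python
# CoreWeave figures quoted in the text, in $ billions.
REVENUE_2024 = 1.9
NET_LOSS_2024 = 0.863
DEBT = 8.0
DEBT_PAYMENTS_2025 = 2.0  # the article's estimate for this year

net_margin = -NET_LOSS_2024 / REVENUE_2024
debt_to_revenue = DEBT / REVENUE_2024
payments_to_revenue = DEBT_PAYMENTS_2025 / REVENUE_2024  # 2024 revenue as a rough proxy

print(f"Net margin: {net_margin:.0%}")
print(f"Debt / revenue: {debt_to_revenue:.1f}x")
print(f"Debt payments / 2024 revenue: {payments_to_revenue:.0%}")
```

On these numbers, the company loses about 45 cents per dollar of revenue. This year's debt payments alone would exceed all of 2024's revenue.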

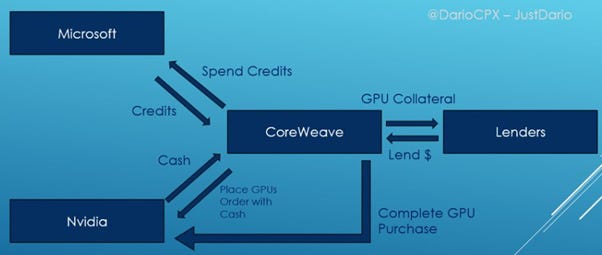

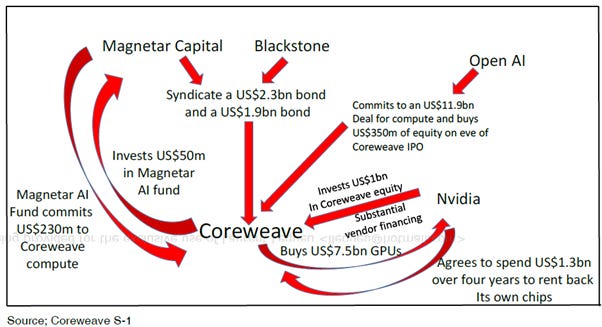

If ever there was a canary in the coal mine for peak bubbliciousness, it is the CoreWeave IPO. Backed by Nvidia, CoreWeave provides high-performance, GPU-accelerated infrastructure for AI and machine learning, placing it at the heart of the so-called capex boom. However, its IPO valuation was sharply reduced, and the offering size shrank from $3 billion to $1.5 billion. CoreWeave's rapid revenue growth has been fueled by unsustainable capex and cash burn, reminiscent of WeWork's rise. In 2024, the company burned nearly $6 billion in cash, following a $1.1 billion loss the previous year, driven by massive investments in AI infrastructure. Given its close ties to Microsoft, CoreWeave has been at the center of speculation about revenue round-tripping schemes involving Microsoft, Nvidia, and OpenAI.

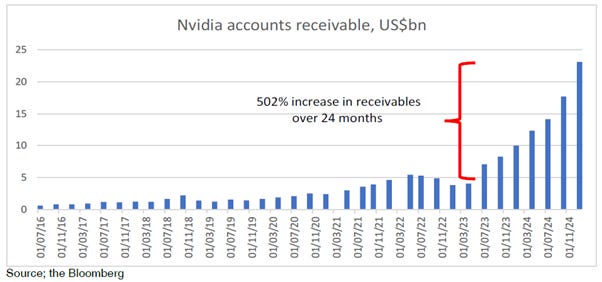

Round-tripping in the AI ecosystem, where money a company sends out through investments or financing comes back to it as revenue, is nothing new, but how problematic it can be has not been fully emphasized. A legitimate business will NEVER engage in round-tripping. A legitimate business aims to earn returns above its cost of capital and to accumulate cash. When round-tripping is happening, it is a clear sign that something is amiss. The mildest form of round-tripping is vendor financing. This can be acceptable when the financing is small relative to the business and the product's ecosystem is healthy and profitable. It is not acceptable, however, when the vendor financing is large, when customers are highly indebted and losing money, or when they repeatedly delay filing financial reports, as Nvidia’s third-largest customer has done. Nvidia's accounts receivable have risen by about a quarter relative to revenues over the past six months, which should concern any genuine Wall Street analyst.
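The receivables point can be made concrete with two measures: the receivables-to-revenue ratio and days sales outstanding (DSO). The quarterly figures below are hypothetical, chosen only so that the ratio rises by about a quarter as described. They are not Nvidia's reported numbers, and the 91-day quarter is an approximation.

```python
from dataclasses import dataclass

@dataclass
class Quarter:
    label: str
    revenue: float              # $ billions for the quarter
    accounts_receivable: float  # $ billions at quarter end

def receivables_ratio(q: Quarter) -> float:
    """Accounts receivable as a fraction of quarterly revenue."""
    return q.accounts_receivable / q.revenue

def days_sales_outstanding(q: Quarter, days_in_period: int = 91) -> float:
    """Average days needed to collect revenue; 91 days approximates one quarter."""
    return receivables_ratio(q) * days_in_period

# Hypothetical quarters six months apart, not Nvidia's reported figures.
earlier = Quarter("two quarters ago", revenue=30.0, accounts_receivable=15.0)
latest = Quarter("latest quarter", revenue=36.0, accounts_receivable=22.5)

ratio_change = receivables_ratio(latest) / receivables_ratio(earlier) - 1
for q in (earlier, latest):
    print(f"{q.label}: DSO of {days_sales_outstanding(q):.0f} days")
print(f"Receivables-to-revenue ratio change: {ratio_change:+.0%}")
```

In this example, revenue grows 20% while receivables grow 50%, so the ratio rises by 25%. Customers are taking longer to pay. A rising ratio is a warning sign when those same customers are also being financed by the vendor.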

Round-tripping is so pervasive in the AI ecosystem that one might wonder whether any major transaction in this market is free of some form of double-dealing. This raises a significant red flag for the entire industry. The chart below represents the best attempt to illustrate the round-tripping CoreWeave is involved in, based on the company's S-1 filing. Full disclosure: this is likely only a partial picture, and there may be errors in the analysis. Where firms' names were not fully disclosed, their identities have been assumed, as companies rarely disclose their counterparties unless compelled to do so.
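Mapping round-tripping amounts to finding cycles in a directed graph of payments. Each edge points from payer to payee, and a cycle means money leaving a firm eventually comes back to it. The sketch below runs a simple depth-first search over hypothetical, anonymized flows. Labels such as `chip_vendor` and `gpu_cloud` are placeholders, not counterparties taken from the S-1.

```python
from collections import defaultdict

def find_cycles(flows: list[tuple[str, str]]) -> list[list[str]]:
    """Return each simple cycle in a payer -> payee graph exactly once.

    A cycle is listed starting from its alphabetically smallest node, which
    avoids reporting the same loop again from a different starting firm.
    """
    graph: dict[str, set[str]] = defaultdict(set)
    for payer, payee in flows:
        graph[payer].add(payee)

    cycles: list[list[str]] = []

    def dfs(start: str, node: str, path: list[str]) -> None:
        for nxt in sorted(graph.get(node, ())):
            if nxt == start:
                cycles.append(path + [start])      # money is back where it started
            elif nxt > start and nxt not in path:  # only visit larger nodes, no repeats
                dfs(start, nxt, path + [nxt])

    for start in sorted(graph):
        dfs(start, start, [start])
    return cycles

# Hypothetical, anonymized payment flows (payer, payee); not taken from the S-1.
flows = [
    ("chip_vendor", "gpu_cloud"),     # equity investment
    ("gpu_cloud", "chip_vendor"),     # GPU purchases
    ("software_giant", "gpu_cloud"),  # compute contract
    ("software_giant", "model_lab"),  # investment and cloud credits
    ("model_lab", "software_giant"),  # cloud spending
    ("model_lab", "gpu_cloud"),       # compute contract
]

for cycle in find_cycles(flows):
    print(" -> ".join(cycle))
```

On the example flows, the search reports two loops. In the first, investment goes from `chip_vendor` to `gpu_cloud` and returns as GPU purchases. The second is a similar loop between `software_giant` and `model_lab`. Real filings would also need edge amounts and dates to judge how much of a firm's revenue is recycled.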

Read more and discover how to trade it here: https://themacrobutler.substack.com/p/money-aist

If this research has inspired you to invest in gold and silver, consider GoldSilver.com to buy your physical gold:

https://goldsilver.com/?aff=TMB

Disclaimer

The content provided in this newsletter is for general information purposes only. No information, materials, services, or other content provided in this post constitutes a solicitation, recommendation, or endorsement, or any financial, investment, or other advice.

Seek independent professional consultation in the form of legal, financial, and fiscal advice before making any investment decisions.

Always perform your own due diligence.